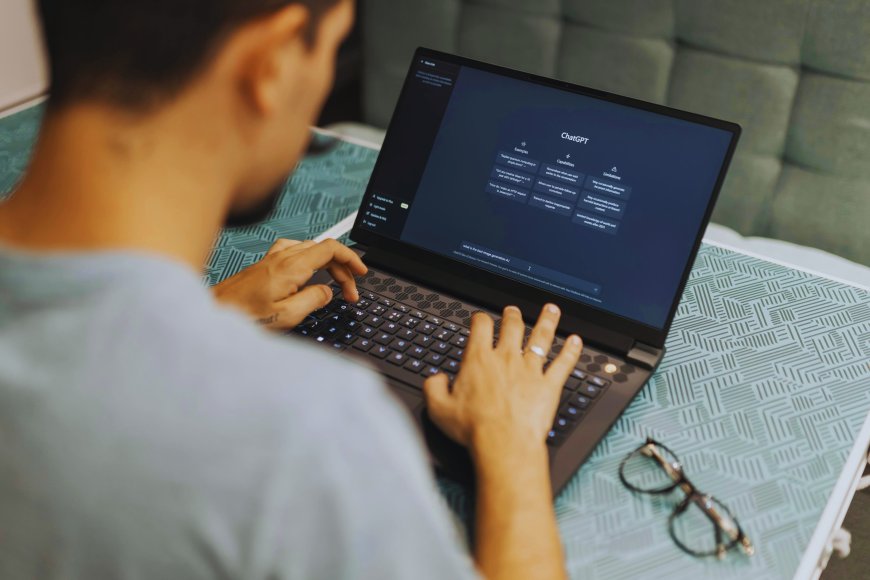

AI Integration Threatens Corporate Privacy as Employees Accidentally Share Business Critical Information

Corporate data security is at risk as employees unknowingly leak sensitive information through AI tools like ChatGPT, with shadow AI use exposing enterprises to major compliance, privacy, and data breach threats.

Employees are unknowingly exposing critical company information via generative AI tools such as ChatGPT, putting corporate data security at risk, according to new research. LayerX Security's Enterprise AI and SaaS Data Security Report 2025 finds that many employees enter sensitive information into AI chatbots using personal or unmanaged accounts that are not covered by enterprise security measures. According to the report, ChatGPT accounts for 77% of all online AI tool usage, and around 18% of employees paste corporate data into such applications. More than half of these pastes contain critical company information, exposing firms to AI-related data breaches.

LayerX's CEO cautioned that feeding company data into AI tools could result in regulatory, compliance, and even geopolitical issues. Once data enters public AI systems, it might be used for model training or fall into the wrong hands, increasing the danger of corporate espionage and data theft.

AI Tools Become the New Gateway for Corporate Data Leaks

Recent research reveals that AI tools have become the leading source of corporate-to-personal data leaks, accounting for 32% of all unauthorized data transfers. Nearly 40% of uploaded files contain personal or financial details, while 22% include sensitive regulatory information, posing serious risks for companies subject to GDPR, HIPAA, and SOX. The main cause is “shadow AI”: employees using personal accounts and unmanaged browsers to access tools like ChatGPT, Salesforce, and Microsoft Online. Around 71.6% of AI usage occurs outside official systems, with users copying and pasting sensitive data multiple times a day, often without realizing it. This simple yet risky behavior bypasses data loss prevention (DLP) tools and traditional firewall protections, creating a growing security gap in enterprise environments.

Shadow AI Creates Invisible Security Gaps

The rise of shadow AI is creating serious challenges for enterprise IT and security teams, as personal accounts used outside official systems make it nearly impossible to monitor or prevent unauthorized data leaks. Simple actions like copying and pasting into AI tools can unknowingly expose sensitive corporate data, code, or client information. This hidden data flow has become a compliance risk, especially for regulated industries such as finance, healthcare, and government, where even one unmanaged AI interaction could lead to heavy penalties and loss of trust. As dependence on generative AI tools like ChatGPT grows, organizations must strengthen data governance to balance convenience with control.

Empowering Organizations with Layered Defense Strategies for AI Security

To mitigate these threats, experts recommend that businesses implement a multi-layered AI security strategy that includes centralised access controls, browser and endpoint monitoring, secure AI APIs, and robust governance tools for tracking data usage. Employee awareness and training on safe AI practices are equally crucial in preventing inadvertent disclosures. According to the LayerX analysis, AI usage is outpacing enterprise security, opening up new avenues for data breaches. As tools like ChatGPT become more integrated into daily operations, organisations need to find a balance between innovation and stringent data protection, ensuring that the same technology that drives advancement does not become the greatest threat to corporate privacy and trust.
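As a rough illustration of what one such layer might look like, the Python sketch below checks text for a few sensitive-looking patterns before it is pasted into an AI tool. It is not taken from the LayerX report: the pattern list, the function names `find_sensitive` and `allow_paste`, and the block-on-any-match policy are all assumptions made for this example, and a production DLP control would need far more thorough rules.

```python
import re

# Hypothetical patterns, for illustration only. Real DLP rule sets are
# much broader and are tuned to each organisation's data.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "api_key": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}


def find_sensitive(text):
    """Return a sorted list of the pattern names that match the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


def allow_paste(text):
    """Assumed policy: allow the paste only if no pattern matches."""
    return not find_sensitive(text)


if __name__ == "__main__":
    sample = "Contact jane.doe@example.com, SSN 123-45-6789"
    print(find_sensitive(sample))
    print(allow_paste("Summarize this public press release"))
```

A check like this would only be useful where the organisation can actually see the paste, for example in a managed browser or endpoint agent, which is why the report's finding on unmanaged accounts matters.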

Information referenced in this article is from Esecurity